Network Policy Reference Examples

A collection of NetworkPolicy scenarios from several Killercoda courses, gathered for CKA exam preparation.

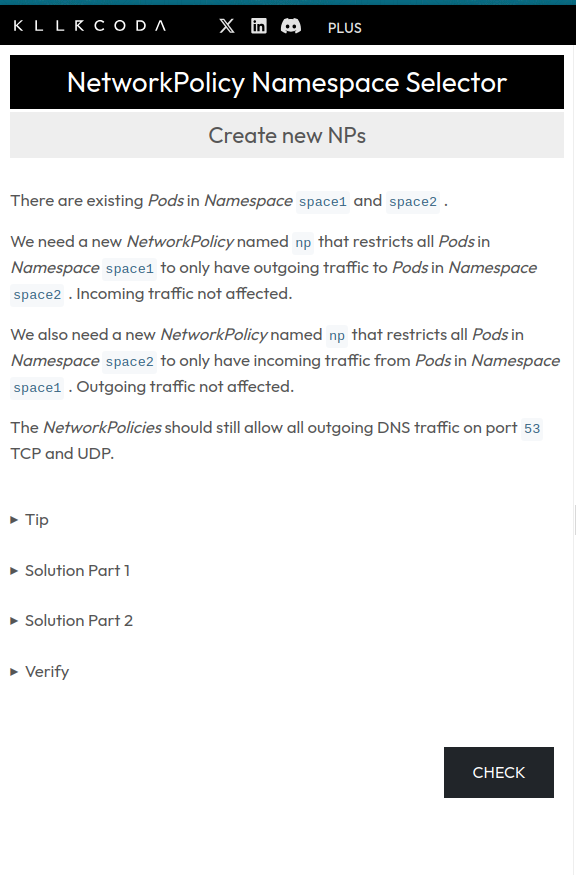

Question 1: Namespace Communication

Source: https://killercoda.com/killer-shell-cka/scenario/networkpolicy-namespace-communication

Setup

```bash
kubectl create ns space1
kubectl create ns space2
```

Network Policies

Egress Policy (space1):

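```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  namespace: space1
  name: np
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: space2
    # Separate peer (note the leading "-"): this also allows egress to all
    # Pods in space1 itself. Remove it if space1 may only talk to space2.
    - podSelector: {}
  # Second rule: a rule with only "ports" allows DNS to any destination
  - ports:
    - protocol: TCP
      port: 53
    - protocol: UDP
      port: 53
```

Apply: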

```bash
kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  namespace: space1
  name: np
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: space2
    - podSelector: {}
  - ports:
    - protocol: TCP
      port: 53
    - protocol: UDP
      port: 53
EOF
```

Ingress Policy (space2):

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ns
  namespace: space2
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: space1
```

Apply:

```bash
kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ns
  namespace: space2
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: space1
EOF
```

Testing

```bash
# create test pods with proper networking tools
kubectl run tester -n space1 --image=curlimages/curl --restart=Never -- sleep 3600
kubectl run server -n space2 --image=nginx --restart=Never
kubectl run test2 -n space2 --image=curlimages/curl --restart=Never -- sleep 3600

# wait until all pods are running
kubectl wait --for=condition=Ready pod --all -n space1 --timeout=60s
kubectl wait --for=condition=Ready pod --all -n space2 --timeout=60s

# get server pod IP
kubectl get pod server -n space2 -o wide
```
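The HTTP tests that follow hard-code 10.244.0.21, which was the server Pod IP when these notes were taken and will differ on another cluster. A minimal sketch of a helper (the name `pod_ip` is hypothetical) that reads the IP from the Pod status with a JSONPath query instead of copying it from `-o wide`:

```bash
# Hypothetical helper: print a Pod's IP from its status.
# Usage: pod_ip <namespace> <pod>
pod_ip() {
  local ns="$1" pod="$2" ip
  ip=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.status.podIP}') || return 1
  # An empty IP means the Pod has not been scheduled/started yet
  if [ -z "$ip" ]; then
    echo "pod $ns/$pod has no IP yet" >&2
    return 1
  fi
  echo "$ip"
}

# Example:
#   SERVER_IP=$(pod_ip space2 server)
#   kubectl exec -n space1 tester -- curl -s --connect-timeout 5 "http://$SERVER_IP"
```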

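```bash
# 1. DNS egress test from space1 (ALLOWED by policy)
kubectl exec -n space1 tester -- nslookup kubernetes.default.svc.cluster.local

# 2. space1 -> space2 HTTP access (ALLOWED by both policies)
#    10.244.0.21 is the server Pod IP from the previous step; substitute your own
kubectl exec -n space1 tester -- curl --connect-timeout 5 http://10.244.0.21

# 3. space2 -> space1 access: NOT blocked by any policy (space1 has no ingress
#    policy and space2 has no egress policy). This curl still fails, but only
#    because no Service named tester exists and the tester Pod serves nothing.
kubectl exec -n space2 test2 -- curl --connect-timeout 5 http://tester.space1.svc.cluster.local

# 4. other namespace (default) -> space2 access (DENIED: ingress restricted to space1 only)
kubectl run outsider --image=curlimages/curl --restart=Never -- sleep 3600
kubectl wait --for=condition=Ready pod/outsider --timeout=60s
kubectl exec outsider -- curl --connect-timeout 5 http://10.244.0.21
```

Cleanup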

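```bash
kubectl delete pod tester -n space1 --force --grace-period=0
kubectl delete pod test2 -n space2 --force --grace-period=0
kubectl delete pod server -n space2 --force --grace-period=0
kubectl delete pod outsider --force --grace-period=0
kubectl delete networkpolicy np -n space1
kubectl delete networkpolicy ns -n space2
kubectl delete ns space1
kubectl delete ns space2
```

Verification Commands

These checks use the Pods and Services provided by the Killercoda scenario (app1-0, microservice1, microservice2, validate-checker-pod), not the test Pods created above. `k` is an alias for `kubectl`.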

```bash
# these should work
k -n space1 exec app1-0 -- curl -m 1 microservice1.space2.svc.cluster.local
k -n space1 exec app1-0 -- curl -m 1 microservice2.space2.svc.cluster.local
k -n space1 exec app1-0 -- nslookup tester.default.svc.cluster.local
k -n kube-system exec -it validate-checker-pod -- curl -m 1 app1.space1.svc.cluster.local

# these should not work
k -n space1 exec app1-0 -- curl -m 1 tester.default.svc.cluster.local
k -n kube-system exec -it validate-checker-pod -- curl -m 1 microservice1.space2.svc.cluster.local
k -n kube-system exec -it validate-checker-pod -- curl -m 1 microservice2.space2.svc.cluster.local
```
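The verification list is a mix of "should work" and "should not work" curl calls. A minimal table-driven sketch (the function name `run_checks` and its input format are hypothetical) runs such a list and reports each result. It only covers curl checks, and like the manual commands it treats any curl failure (timeout, DNS error, refused connection) as blocked, so a PASS on a `deny` line does not by itself prove that a NetworkPolicy did the blocking.

```bash
# Hypothetical table-driven runner for curl-based NetworkPolicy checks.
# Input (stdin), one check per line:  <allow|deny> <namespace> <pod> <url>
# Blank lines and lines starting with "#" are ignored.
# Any curl failure (timeout, DNS error, refused) is recorded as "deny".
run_checks() {
  local expect ns pod url got failures=0
  while read -r expect ns pod url; do
    [ -z "$expect" ] && continue
    case "$expect" in \#*) continue ;; esac
    # </dev/null stops kubectl from consuming the remaining check lines
    if kubectl -n "$ns" exec "$pod" -- curl -s -o /dev/null -m 1 "$url" </dev/null; then
      got=allow
    else
      got=deny
    fi
    if [ "$got" = "$expect" ]; then
      echo "PASS $ns/$pod -> $url"
    else
      echo "FAIL $ns/$pod -> $url (expected $expect, got $got)"
      failures=$((failures + 1))
    fi
  done
  # non-zero exit status if any check failed
  [ "$failures" -eq 0 ]
}

# Example (against the scenario's Pods):
#   run_checks <<'CHECKS'
#   allow space1 app1-0 microservice1.space2.svc.cluster.local
#   allow space1 app1-0 microservice2.space2.svc.cluster.local
#   deny  space1 app1-0 tester.default.svc.cluster.local
#   CHECKS
```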

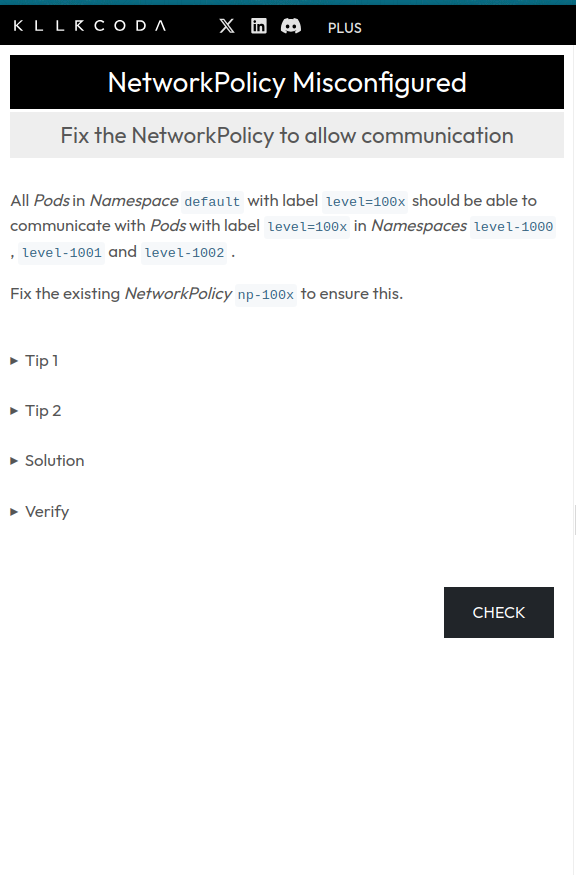

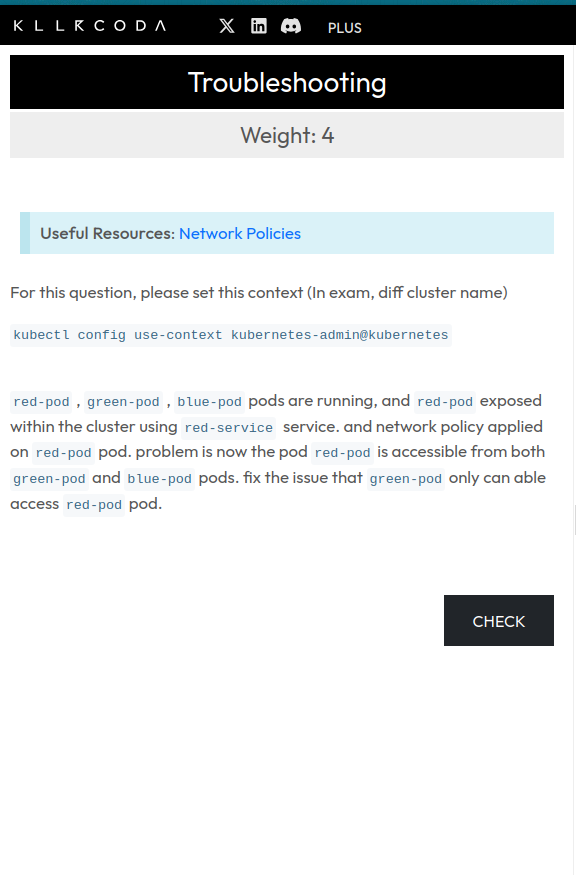

```bash
# should not work: Pods in default are not allowed into space2
k -n default run nginx --image=nginx:1.21.5-alpine --restart=Never -i --rm -- curl -m 1 microservice1.space2.svc.cluster.local
```

Question 2: Misconfigured Network Policy

Source: https://killercoda.com/killer-shell-cka/scenario/networkpolicy-misconfigured

Network Policy

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: np-100x
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchExpressions:
        - key: kubernetes.io/metadata.name
          operator: In
          values: ["level-1000", "level-1001", "level-1002"]
  - ports:
    - protocol: TCP
      port: 53
    - protocol: UDP
      port: 53
```

Apply and Test

```bash
kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: np-100x
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchExpressions:
        - key: kubernetes.io/metadata.name
          operator: In
          values: ["level-1000", "level-1001", "level-1002"]
  - ports:
    - protocol: TCP
      port: 53
    - protocol: UDP
      port: 53
EOF
```

```bash
# Create test namespaces and servers
for ns in level-1000 level-1001 level-1002; do
  kubectl create ns "$ns"
  kubectl run server --image nginx -n "$ns"
done
```
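The `matchExpressions` entry with `operator: In` selects every namespace whose `kubernetes.io/metadata.name` label is one of the listed values. Kubernetes sets that label automatically on every namespace (since v1.21), and kubectl accepts the same set-based selector syntax on the command line. A quick sanity check, as a sketch with hypothetical helper names, is to list the namespaces that selector matches once the loop has created them:

```bash
# Hypothetical helpers: build a set-based selector equivalent to
#   matchExpressions: [{key: kubernetes.io/metadata.name, operator: In, values: [...]}]
# and list the namespaces it matches.
selector_for() {
  # Join all arguments with commas (IFS is local to this function)
  local IFS=,
  printf 'kubernetes.io/metadata.name in (%s)' "$*"
}

show_selected_ns() {
  kubectl get ns -l "$(selector_for "$@")"
}

# Example:
#   show_selected_ns level-1000 level-1001 level-1002
```

All three namespaces should be listed; a missing row points to a namespace name that does not exist, which would also make the policy's `In` list miss it.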

# Test connectivity

kubectl exec tester-0 -- curl tester.level-1000.svc.cluster.local

kubectl exec tester-0 -- curl tester.level-1001.svc.cluster.local

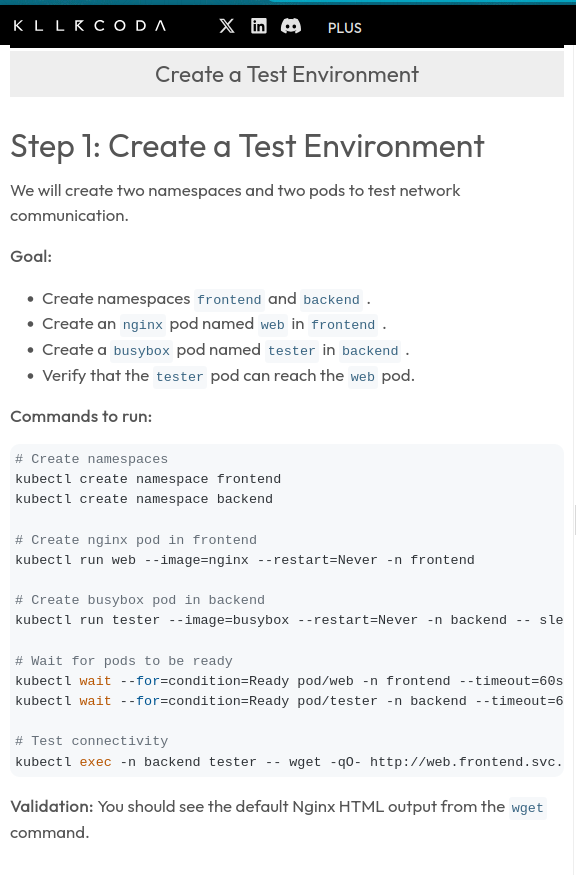

kubectl exec tester-0 -- curl tester.level-1002.svc.cluster.localQuestion 3: Frontend/Backend Network Isolation

Source: https://killercoda.com/alexis-carbillet/course/CKA/network-policies

Setup

```bash
# Create namespaces
kubectl create namespace frontend
kubectl create namespace backend

# Create nginx pod in frontend
kubectl run web --image=nginx --restart=Never -n frontend

# Create busybox pod in backend
kubectl run tester --image=busybox --restart=Never -n backend -- sleep 3600

# Wait for pods to be ready
kubectl wait --for=condition=Ready pod/web -n frontend --timeout=60s
kubectl wait --for=condition=Ready pod/tester -n backend --timeout=60s
```
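`kubectl run` labels the Pod it creates with `run=<pod-name>`, so the web Pod carries `run=web`, and a NetworkPolicy `podSelector` must match that label to select it. `kubectl get pod web -n frontend --show-labels` shows this interactively; a small sketch of a scriptable check (the helper name `pod_has_label` is hypothetical):

```bash
# Hypothetical helper: succeed if a Pod has label <key>=<value>.
# Usage: pod_has_label <namespace> <pod> <key> <value>
# Simple keys such as run or app work as-is; keys containing dots or
# slashes would need JSONPath escaping.
pod_has_label() {
  local ns="$1" pod="$2" key="$3" value="$4" actual
  actual=$(kubectl get pod "$pod" -n "$ns" -o "jsonpath={.metadata.labels.$key}") || return 1
  [ "$actual" = "$value" ]
}

# Example:
#   pod_has_label frontend web run web && echo "run=web present"
#   pod_has_label frontend web app web || echo "no app=web label"
```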

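```bash
# Test connectivity (10.244.0.6 is the web Pod IP here; check yours with
# kubectl get pod web -n frontend -o wide)
kubectl exec -n backend tester -- wget -qO- http://10.244.0.6
# resolves only if a Service named web exists in frontend
kubectl exec -n backend tester -- nslookup web.frontend.svc.cluster.local
```

Network Policy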

yaml

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: deny-external

namespace: frontend

spec:

podSelector:

matchLabels:

app: web

policyTypes:

- Ingress

ingress:

- from:

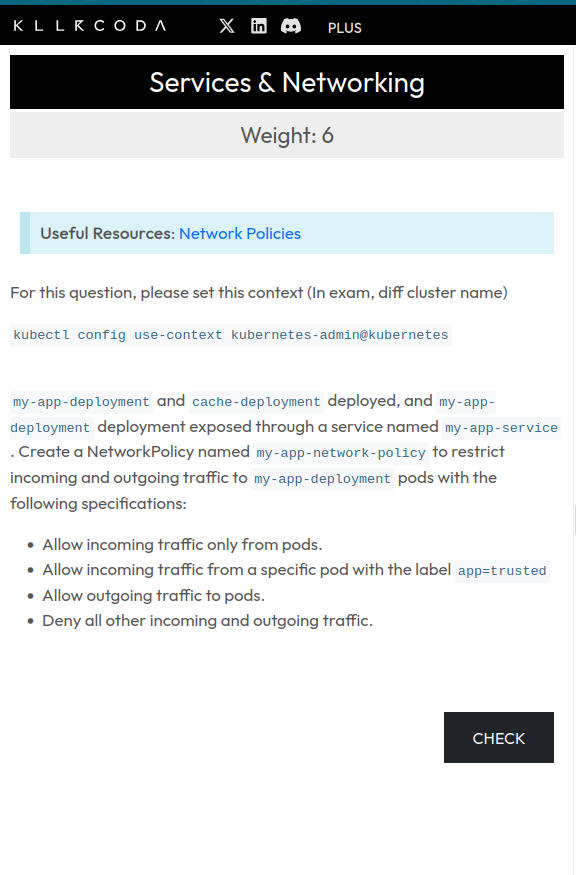

- podSelector: {}Question 4: Ingress and Egress Policy

Source: https://killercoda.com/sachin/course/CKA/network-policy

Network Policy

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-app-network-policy
  namespace: default # Policy applies in the default namespace
spec:
  # Selects the pods this policy applies to
  # Only pods with label app=my-app are affected
  podSelector:
    matchLabels:
      app: my-app
  # This policy controls both ingress and egress traffic
  policyTypes:
  - Ingress
  - Egress
  # Ingress rules (incoming traffic to my-app pods)
  ingress:
  # Rule 1: allow ingress from ALL pods in the SAME namespace
  - from:
    - podSelector: {} # {} means "all pods"
  # Rule 2: allow ingress from pods labeled app=trusted (same namespace).
  # Rules are ORed, so Rule 1 already admits these pods; Rule 2 adds nothing.
  - from:
    - podSelector:
        matchLabels:
          app: trusted
  # Egress rules (outgoing traffic from my-app pods)
  egress:
  # Allow egress to ALL pods in the SAME namespace.
  # DNS Pods in kube-system are not covered, so name lookups from my-app
  # pods fail unless a port 53 egress rule is added.
  - to:
    - podSelector: {} # {} means "all pods"
```

Question 5: Network Policy Troubleshooting

Source: https://killercoda.com/sachin/course/CKA/network-policy-issue

Initial State (Broken)

```bash
controlplane:~$ k get netpol
NAME                   POD-SELECTOR   AGE
allow-green-and-blue   run=red-pod    99s
controlplane:~$ k get netpol -o yaml
apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"networking.k8s.io/v1","kind":"NetworkPolicy","metadata":{"annotations":{},"name":"allow-green-and-blue","namespace":"default"},"spec":{"ingress":[{"from":[{"podSelector":{"matchLabels":{"run":"green-pod"}}},{"podSelector":{"matchLabels":{"run":"blue-pod"}}}]}],"podSelector":{"matchLabels":{"run":"red-pod"}},"policyTypes":["Ingress"]}}
    creationTimestamp: "2026-01-17T12:05:12Z"
    generation: 1
    name: allow-green-and-blue
    namespace: default
    resourceVersion: "2978"
    uid: 7b384cd5-63a7-4a55-acc2-6206ecce73e6
  spec:
    ingress:
    - from:
      - podSelector:
          matchLabels:
            run: green-pod
      - podSelector:
          matchLabels:
            run: blue-pod
    podSelector:
      matchLabels:
        run: red-pod
    policyTypes:
    - Ingress
kind: List
metadata:
  resourceVersion: ""
```

Fix Applied

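```bash
controlplane:~$ k edit netpol
networkpolicy.networking.k8s.io/allow-green-and-blue edited
```

The edit removes the `run: blue-pod` peer from the `from` list, so only Pods labeled `run=green-pod` can reach Pods labeled `run=red-pod`.

Fixed State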

```bash
controlplane:~$ k get netpol -o yaml
apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"networking.k8s.io/v1","kind":"NetworkPolicy","metadata":{"annotations":{},"name":"allow-green-and-blue","namespace":"default"},"spec":{"ingress":[{"from":[{"podSelector":{"matchLabels":{"run":"green-pod"}}},{"podSelector":{"matchLabels":{"run":"blue-pod"}}}]}],"podSelector":{"matchLabels":{"run":"red-pod"}},"policyTypes":["Ingress"]}}
    creationTimestamp: "2026-01-17T12:05:12Z"
    generation: 2
    name: allow-green-and-blue
    namespace: default
    resourceVersion: "3196"
    uid: 7b384cd5-63a7-4a55-acc2-6206ecce73e6
  spec:
    ingress:
    - from:
      - podSelector:
          matchLabels:
            run: green-pod
    podSelector:
      matchLabels:
        run: red-pod
    policyTypes:
    - Ingress
kind: List
metadata:
  resourceVersion: ""
```
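The fix was made interactively with `k edit`. For scripting, a non-interactive alternative is `kubectl patch --type=json` with an RFC 6902 JSON Patch. The sketch below assumes the policy still has the broken shape shown under Initial State, with the blue-pod peer at index 1 of the first ingress rule's `from` list. The helper name `remove_blue_peer` is hypothetical. The `test` operation is an optional safety guard, so drop it if your cluster rejects it.

```bash
# Hypothetical helper: apply the Question 5 fix without an editor.
# JSON Patch (RFC 6902): the "test" op aborts the whole patch unless
# from[1] is still the run=blue-pod peer; "remove" then deletes it.
remove_blue_peer() {
  local patch='[{"op":"test","path":"/spec/ingress/0/from/1/podSelector/matchLabels/run","value":"blue-pod"},{"op":"remove","path":"/spec/ingress/0/from/1"}]'
  kubectl patch networkpolicy allow-green-and-blue -n default --type=json -p "$patch"
}

# Example:
#   remove_blue_peer
#   kubectl get netpol allow-green-and-blue -o yaml
```

After patching, the `spec` should match the one under Fixed State.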